Affective Computing: Robots With Empathy

Affective computing is a relatively new research field that has developed at the intersection of several classical disciplines. It is, of course, related to computer science, but it is also linked to psychology and sociology, among other areas. Put simply, affective computing has an extraordinarily ambitious goal: to make machines exhibit empathy, or at least behave as if they possessed it.

Affective computing aims to detect, record, and understand human emotions. The goal is to translate emotions into terms a computer can process and, from there, to develop technology that helps us by working in closer symbiosis with people.

The term affective computing was first used in 1995. Today, we can see it at work on computers, cell phones, and similar devices. Indeed, even the simple fact that a computer asks the user a question and lets them choose which path to follow is a small example of built-in empathy.

“Robots will harvest, cook and serve our food. They will work in our factories, drive our cars, and walk our dogs. Like it or not, the age of work is coming to an end.”

-Gray Scott-

Affective computing

The first person to talk about affective computing was Dr. Rosalind Picard, director of the Affective Computing Group at the MIT Media Lab. In 1995, she published an article on the subject in Wired magazine. However, the text wasn't taken particularly seriously at first, as the computer world was more concerned with increasing the power of machines than with their ability to understand emotions.

Over time, however, things changed. It became clear that it wasn't enough to create devices capable of performing many functions. Users also needed to be able to adopt these devices and incorporate them into their lives without feeling that they were doing 'something strange'.

Consequently, machines have been improved so that they're increasingly capable of detecting and interpreting human emotions. This doesn't mean they understand affect in the strict sense. Rather, they can 'read' signs of feelings in human beings and take them into account before acting.

The importance of empathy

Today, devices are used for many tasks they didn't perform before. For example, a device or digital channel often handles customers' initial complaints. These devices and programs can guide users until they find what they need or, if necessary, redirect them to a human agent.

This is a simple function that makes use of affective computing. These applications are often able to detect a user's confusion and offer alternatives that help clarify what the user wants to ask and what steps to take to achieve it. More sophisticated devices and applications do the same on a larger scale.
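The article doesn't describe how such applications detect confusion. As a minimal sketch, assuming just two hypothetical signals (explicit phrases such as "I don't understand" and the user rephrasing nearly the same question), a support bot might score confusion and then decide whether to keep going, offer clarifying options, or hand the conversation to a human. The marker phrases, weights, and threshold below are illustrative assumptions, not values from any real product.

```python
from difflib import SequenceMatcher

# Hypothetical phrases treated as signs of confusion; a real system would
# more likely learn such cues from data than hard-code them.
CONFUSION_MARKERS = ("i don't understand", "what do you mean",
                     "that's not what i asked", "??")


def confusion_score(messages: list[str]) -> float:
    """Return a rough 0..1 confusion score for a user's recent messages."""
    if not messages:
        return 0.0
    lowered = [m.lower() for m in messages]
    # Signal 1: an explicit confusion phrase in the latest message.
    marker = 1.0 if any(p in lowered[-1] for p in CONFUSION_MARKERS) else 0.0
    # Signal 2: the user keeps rephrasing nearly the same question.
    rephrase = 0.0
    if len(lowered) >= 2:
        rephrase = SequenceMatcher(None, lowered[-2], lowered[-1]).ratio()
    # Illustrative weights; they are assumptions, not tuned values.
    return 0.6 * marker + 0.4 * rephrase


def next_action(messages: list[str], threshold: float = 0.5) -> str:
    """Pick the bot's next step from the confusion score and conversation length."""
    score = confusion_score(messages)
    if score >= threshold and len(messages) >= 3:
        return "handoff_to_human"  # confused after several turns: escalate
    if score >= threshold:
        return "offer_clarifying_options"
    return "continue"
```

For example, `next_action(["reset password", "reset my password", "I don't understand, reset password??"])` returns `"handoff_to_human"`, while a single clear question simply returns `"continue"`.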

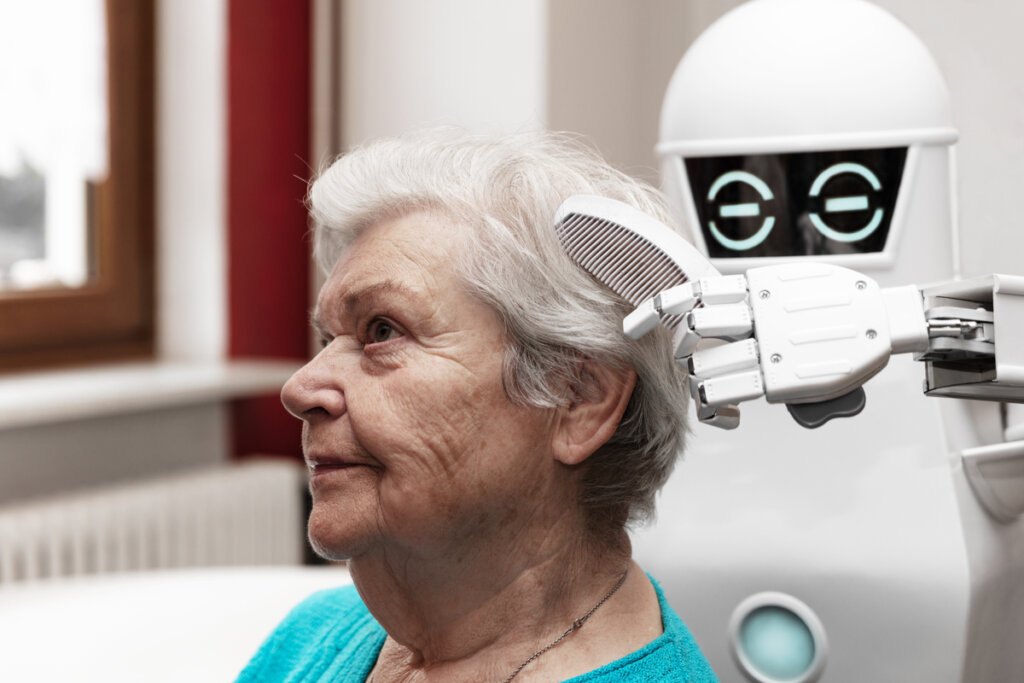

There have also been great advances in robotics. Robots have long been tested as carers for sick people and children, and there are also prototypes that do housework or even provide companionship. That said, for any of this to work well, human subjectivity must be taken into account.

Biometrics

How is affective computing implemented in practice? So far, machines mostly capture certain physiological signals and respond to them. When we experience particular emotions, we emit certain signals. Other people perceive them, often without being aware of it, whereas a machine can measure them directly.

These devices use different types of sensors. For instance, a camera can record facial expressions so that software can infer the emotions behind them. Indeed, facial expressions have been studied intensively within affective computing. Gestures are also interpreted and responded to accordingly.
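The article doesn't say which method these systems use to interpret faces. One widely used framework is the Facial Action Coding System (FACS), developed by Ekman and Friesen, which describes expressions as combinations of numbered facial muscle movements called action units (AUs). The sketch below assumes that a camera-based detector has already reported which AUs are active, and matches them against simplified prototype combinations for three emotions. The prototypes and the overlap threshold are illustrative simplifications, not a complete or validated coding scheme.

```python
# Simplified prototype AU combinations for a few basic emotions.
# Real systems learn these patterns from labelled data.
PROTOTYPES: dict[str, frozenset[int]] = {
    "happiness": frozenset({6, 12}),       # cheek raiser + lip corner puller
    "sadness": frozenset({1, 4, 15}),      # inner brow raiser + brow lowerer + lip corner depressor
    "surprise": frozenset({1, 2, 5, 26}),  # inner/outer brow raiser + upper lid raiser + jaw drop
}


def classify_expression(active_aus: set[int], min_overlap: float = 0.75) -> str:
    """Return the emotion whose prototype best matches the detected AUs."""
    best_label, best_score = "neutral/unknown", 0.0
    for label, proto in PROTOTYPES.items():
        # Fraction of this prototype's AUs that were detected on the face.
        score = len(proto & active_aus) / len(proto)
        if score > best_score:
            best_label, best_score = label, score
    # Below the (assumed) threshold, don't commit to any emotion.
    return best_label if best_score >= min_overlap else "neutral/unknown"
```

For instance, `classify_expression({6, 12})` returns `"happiness"`, while a lone brow lowerer (`{4}`) is too weak a match and yields `"neutral/unknown"`.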

There are also devices that estimate a driver's state of mind on the road. They capture heart and breathing rate, the pressure exerted on the steering wheel, facial expressions, and so on. This allows them to send messages that help reduce stress, for instance by suggesting certain music or a specific route.
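As a rough illustration of how such readings might be combined, the sketch below compares each signal with the driver's own resting baseline and turns the weighted rise into a stress score between 0 and 1, which then selects a message. The field names, weights, thresholds, and messages are all hypothetical; a real system would more likely learn them from data than set them by hand.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Baseline:
    """A driver's resting values (hypothetical fields)."""
    heart_rate_bpm: float
    breaths_per_min: float
    grip_pressure: float  # hypothetical normalised steering-wheel pressure


@dataclass
class DriverReading:
    """Current sensor values plus a hypothetical facial-expression flag."""
    heart_rate_bpm: float
    breaths_per_min: float
    grip_pressure: float
    frowning: bool


def _rise(value: float, base: float) -> float:
    """Relative increase over baseline; readings below baseline count as zero."""
    return max(0.0, (value - base) / base)


def stress_score(r: DriverReading, b: Baseline) -> float:
    """Weighted sum of relative rises over the driver's baseline, capped at 1.0."""
    # Illustrative weights (they sum to 1.0); not taken from any real system.
    score = (0.35 * _rise(r.heart_rate_bpm, b.heart_rate_bpm)
             + 0.25 * _rise(r.breaths_per_min, b.breaths_per_min)
             + 0.25 * _rise(r.grip_pressure, b.grip_pressure)
             + 0.15 * (1.0 if r.frowning else 0.0))
    return min(1.0, score)


def suggestion(score: float) -> Optional[str]:
    """Map a stress score to a hypothetical in-car message, or None."""
    if score >= 0.3:
        return "Traffic ahead looks heavy. Would you like a calmer route?"
    if score >= 0.15:
        return "Would you like to play some relaxing music?"
    return None
```

With a baseline of 70 bpm, 14 breaths per minute, and grip 0.3, a reading of 105 bpm, 21 breaths per minute, grip 0.6, and a frown scores about 0.7, which triggers the route suggestion. Comparing against a personal baseline rather than fixed limits reflects the fact that resting heart and breathing rates vary from person to person.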

This approach is likely to spread to more and more of the machines we interact with. They'll show increasingly more 'empathy', which is essential in areas such as health. That said, we all know it's a programmed response rather than a natural one, like the kind another human could give us. Will it be enough?

Lead Image Editorial Credit: MikeDotta / Shutterstock.com

